Overview¶

Interpolation

Extrapolation, X-Casting

Variable Transformation

Feature Representation

Operator Learning

Interpolation¶

This is the case where every query point lies inside the convex hull of the spatial domain and within the period of observations. From a spatial perspective, if we can draw a straight line between two reference points that passes through the query point of interest, then I would consider it an interpolation problem.

Examples:

Weather Stations Extremes

SST <--> SSH

MODIS <--> GOES <--> MSG
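The convex-hull criterion above can be sketched as a small check. This is a minimal illustration, not a reference implementation: the names `is_interpolation`, `station_xy`, and `obs_times` are hypothetical, the coordinates are treated as planar, and a degenerate hull (fewer than three non-collinear stations) is assumed to count as extrapolation.

```python
def cross(o, a, b):
    """Z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def is_interpolation(query_xy, query_t, station_xy, obs_times):
    """True if the query is inside the spatial hull AND the observed period."""
    hull = convex_hull(station_xy)
    if len(hull) < 3:
        # Assumption: a degenerate hull is treated as extrapolation.
        return False
    # Inside a counter-clockwise polygon means "left of (or on) every edge".
    inside_space = all(
        cross(hull[i], hull[(i + 1) % len(hull)], query_xy) >= 0
        for i in range(len(hull))
    )
    inside_time = min(obs_times) <= query_t <= max(obs_times)
    return inside_space and inside_time


# Hypothetical stations on a 4 x 4 square, observed from t = 0 to t = 10.
stations = [(0, 0), (4, 0), (4, 4), (0, 4), (2, 2)]
times = [0, 10]
inside = is_interpolation((1, 1), 5, stations, times)
outside_space = is_interpolation((5, 1), 5, stations, times)
outside_time = is_interpolation((1, 1), 12, stations, times)
```

A query that fails either the spatial or the temporal test falls under extrapolation instead.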

Extrapolation¶

X-Casting¶

This is the specific case where we are trying to predict outside of the period of observations.

HindCasting

NowCasting

ForeCasting

Climate Projections
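One way to read the list above is as a label on where the query time sits relative to the observation period. The sketch below assumes a simplified convention that is not stated in the text: a hindcast targets times before the first observation, a nowcast targets a short horizon after the last observation, and anything later is a forecast. The function name `casting_regime` and the `nowcast_horizon` threshold are hypothetical, and climate projections are left out because they depend on scenario assumptions rather than on time alone.

```python
def casting_regime(query_t, obs_start, obs_end, nowcast_horizon=6.0):
    """Label a query time relative to the observed period [obs_start, obs_end].

    All times share one unit (e.g., hours). `nowcast_horizon` is an
    illustrative threshold, not a standard value.
    """
    if query_t < obs_start:
        return "hindcast"
    if query_t <= obs_end:
        # Inside the observed period: not an X-casting problem.
        return "interpolation"
    if query_t - obs_end <= nowcast_horizon:
        return "nowcast"
    return "forecast"


labels = [casting_regime(t, obs_start=0.0, obs_end=100.0) for t in (-5.0, 50.0, 103.0, 200.0)]
```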

Variable Transformation¶

Examples:

SST --> SSH

Satellite Instrument --> Satellite Instrument

Feature Representation¶

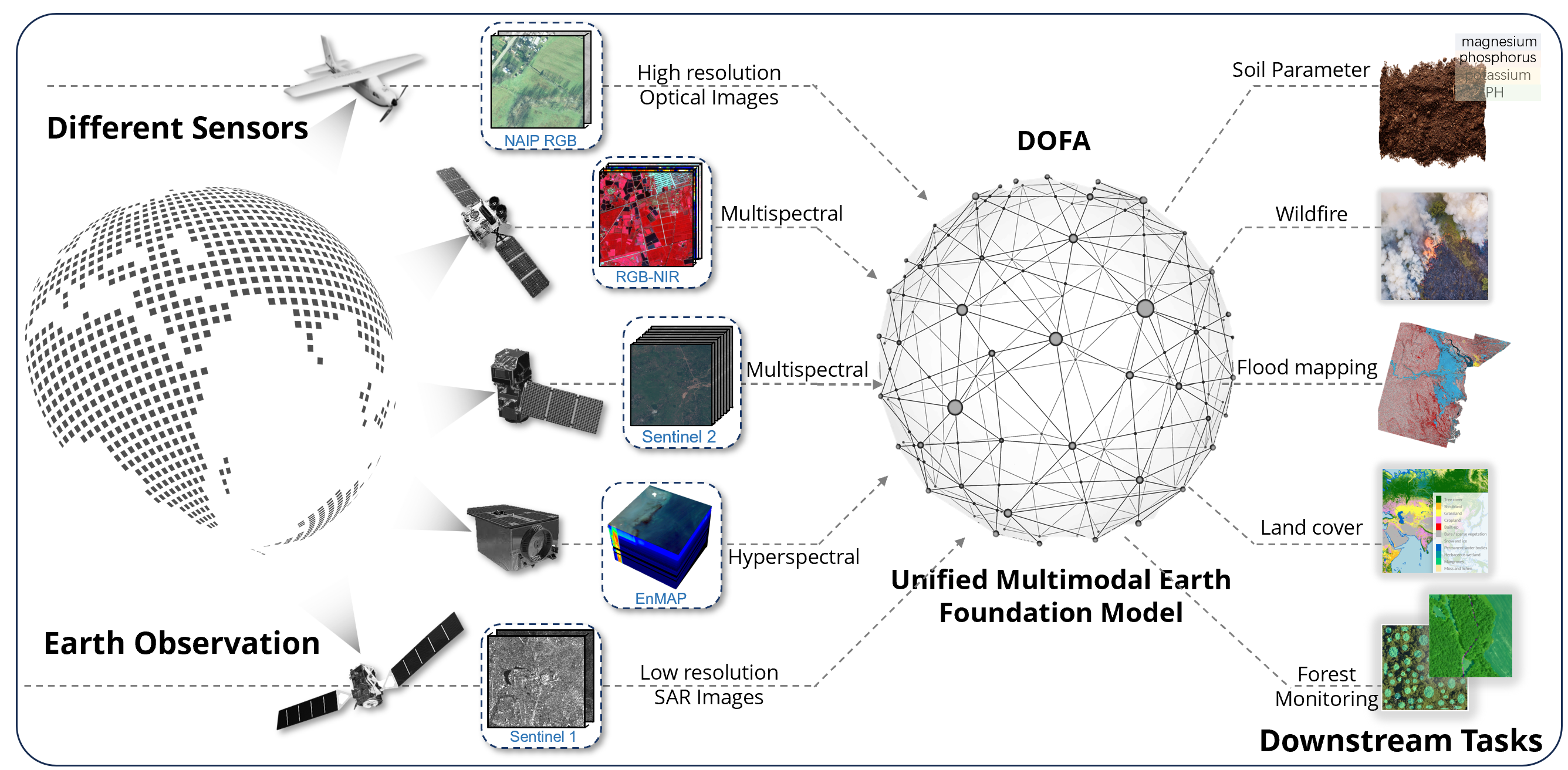

Figure 1: A model from DOFA, which is trained on different modalities of remote sensing data. Source: GitHub | Paper (arXiv)

This is also known as representation learning, and it is the approach behind foundation models.

Strategies:

Data Augmentation, e.g., Small-Medium Perturbations

Masking
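The two strategies above can be sketched with NumPy. This is only an illustration under stated assumptions: the noise is Gaussian and scaled by the field's standard deviation, hidden entries are filled with zero, and the function names `perturb` and `random_mask` as well as the `scale` and `mask_ratio` defaults are hypothetical choices.

```python
import numpy as np


def perturb(x, rng, scale=0.05):
    """Data augmentation: add Gaussian noise with std `scale * x.std()`."""
    return x + rng.normal(0.0, scale * x.std(), size=x.shape)


def random_mask(x, rng, mask_ratio=0.5):
    """Masking: hide a random fraction of entries.

    Returns the masked copy (hidden entries set to 0.0, an assumption)
    and the boolean mask, where True marks a hidden entry.
    """
    mask = rng.random(x.shape) < mask_ratio
    return np.where(mask, 0.0, x), mask


rng = np.random.default_rng(0)
field = np.sin(np.linspace(0.0, 2.0 * np.pi, 64)).reshape(8, 8)  # toy 2D field
noisy = perturb(field, rng)
masked, mask = random_mask(field, rng)
```

A representation model would then be trained to map `noisy` or `masked` back to `field`, so that it has to learn the structure of the data rather than copy its input.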

Examples:

PCA/EOF/POD/SVD

AutoEncoders

Bijective, Surjective, Stochastic

ROM -> AutoEncoders

Linear ROM -> PCA/EOF/POD/SVD, ProbPCA

Simple -> Flow Model

MultiScale -> U-Net
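As a concrete instance of the linear case (PCA/EOF/POD/SVD), the sketch below uses an SVD to build a linear encoder and decoder, which is the sense in which PCA acts like a linear autoencoder. The synthetic data and the names `fit_pca`, `encode`, and `decode` are assumptions made for illustration.

```python
import numpy as np


def fit_pca(X, n_components):
    """Return the sample mean and the top principal directions (rows)."""
    mean = X.mean(axis=0)
    # Rows of Vt are orthonormal directions sorted by explained variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]


def encode(X, mean, components):
    """Project samples onto the latent (reduced) coordinates."""
    return (X - mean) @ components.T


def decode(Z, mean, components):
    """Map latent coordinates back to the original feature space."""
    return Z @ components + mean


# Synthetic data: 200 samples in 10 dimensions that really live on 2 factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 10))

mean, comps = fit_pca(X, n_components=2)
Z = encode(X, mean, comps)
X_hat = decode(Z, mean, comps)
rel_err = np.linalg.norm(X - X_hat) / np.linalg.norm(X)
```

Nonlinear autoencoders, flow models, and U-Nets replace the two matrix products with learned nonlinear maps while keeping the same encode/decode structure.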

Operator Learning¶

So far, we have broken the problem categories into separate subtopics. However, a given application can easily fall into a single problem category or a combination of several. Operator learning is an umbrella term that encompasses all of the categories above.

Now, we wish to learn some operator.

Normally, we can break this into three steps, an approach also known as lift and learn.

Learn a good representation network which encodes our data from an infinite-dimensional domain into a finite-dimensional latent domain.

Do the computations in the finite-dimensional latent space.

Learn a reconstruction function from the finite-dimensional latent domain to another infinite-dimensional domain.
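The three steps above can be sketched on a toy 1D problem. Nothing is trained here: as a stand-in for learned networks, the encoder is a least-squares projection onto a sine basis, the latent computation is the known decay of the heat equation, and the decoder evaluates the basis at arbitrary query points. All names (`sine_basis`, `encode`, `latent_step`, `decode`) and parameter values are assumptions made for illustration.

```python
import numpy as np


def sine_basis(x, n_modes):
    """Evaluate sin(k * pi * x) for k = 1..n_modes at points x in [0, 1]."""
    k = np.arange(1, n_modes + 1)
    return np.sin(np.pi * np.outer(x, k))


def encode(x_obs, u_obs, n_modes):
    """Step 1: project irregular samples of a function onto n_modes coefficients."""
    coeffs, *_ = np.linalg.lstsq(sine_basis(x_obs, n_modes), u_obs, rcond=None)
    return coeffs


def latent_step(coeffs, t, diffusivity=0.01):
    """Step 2: compute in the finite latent space (heat-equation decay per mode)."""
    k = np.arange(1, coeffs.size + 1)
    return coeffs * np.exp(-diffusivity * (np.pi * k) ** 2 * t)


def decode(coeffs, x_query):
    """Step 3: reconstruct a function that can be evaluated at any query points."""
    return sine_basis(x_query, coeffs.size) @ coeffs


rng = np.random.default_rng(0)
x_obs = np.sort(rng.uniform(0.0, 1.0, size=40))  # irregular input locations
u_obs = np.sin(np.pi * x_obs) + 0.5 * np.sin(3 * np.pi * x_obs)
x_grid = np.linspace(0.0, 1.0, 101)  # regular output grid

z = encode(x_obs, u_obs, n_modes=8)
u_grid = decode(latent_step(z, t=1.0), x_grid)
```

Because the input and output are tied to the latent coefficients rather than to a fixed grid, the same pipeline maps irregular samples to a regular grid, which is the kind of pairing listed in the examples that follow.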

Examples:

Unstructured <--> Irregular, e.g., CloudSat + MODIS

Irregular <--> Regular, e.g., AlongTrack + Grid

Regular <--> Regular, e.g., GeoStationary I + GeoStationary II