Linearized GP¶

Recall the GP formulation:

Recall the posterior formulas:

In the case where we have uncertain inputs \mathbf{x} \sim \mathcal{N}(\mu_\mathbf{x}, \Sigma_\mathbf{x}), this function needs to be modified in order to accommodate the uncertainty

The posterior of this distribution is non-Gaussian because we have to propagate a probability distribution through a non-linear kernel function. So this integral becomes intractable. We can compute the analytical Gaussian approximation by only computing the mean and the variance of the posterior distribution wrt the inputs.

The variance term is a bit more complex.

Taylor Approximation¶

Taking complete expectations can be very expensive because we need to take the expectation wrt to the inputs through nonlinear terms such as the kernel functions and their inverses. So, we will approximate our mean and variance function via a Taylor Expansion. First the mean function:

and then the variance function:

Note

To see more about error propagation and the relation to the mean and variance, see here.

So expanding these equations gives us the following:

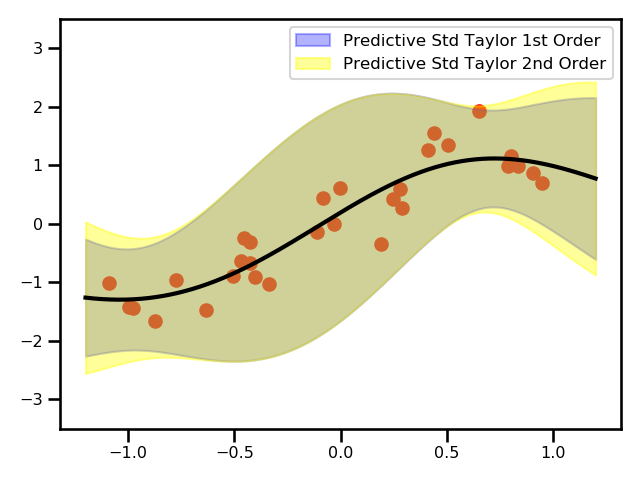

where \nabla_x is the gradient of the function f(\mu_x) w.r.t. x and \nabla_x^2 f(\mu_x) is the second derivative (the Hessian) of the function f(\mu_x) w.r.t. x. This is a second-order approximation which has that expensive Hessian term. There have have been studies that have shown that that term tends to be neglible in practice and a first-order approximation is typically enough.

Practically speaking, this leaves us with the following predictive mean and variance functions:

As seen above, the only extra term we need to include is the derivative of the mean function that is present in the predictive variance term.

Examples¶

1D Demo

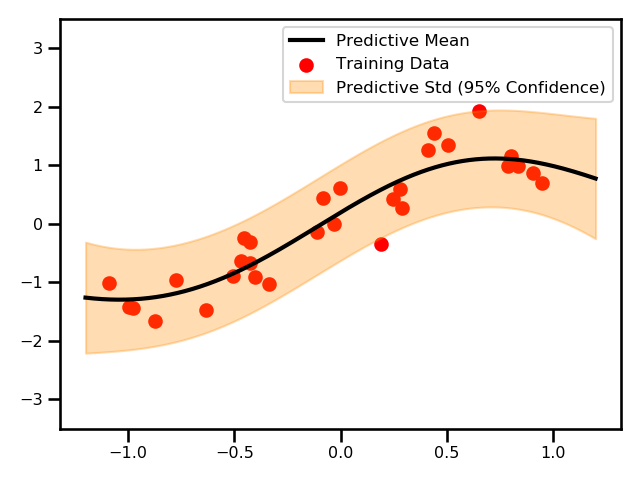

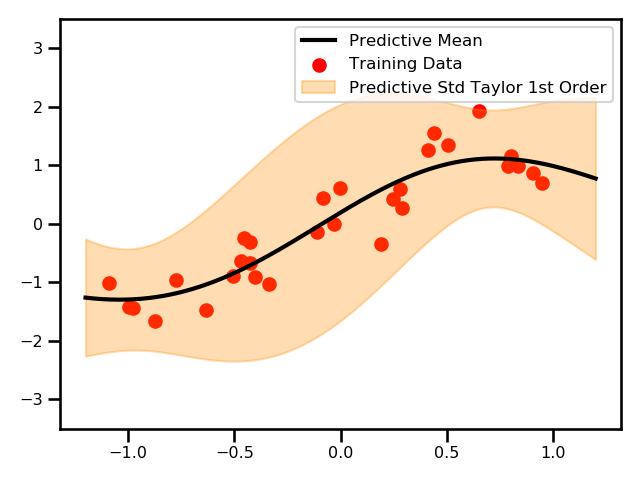

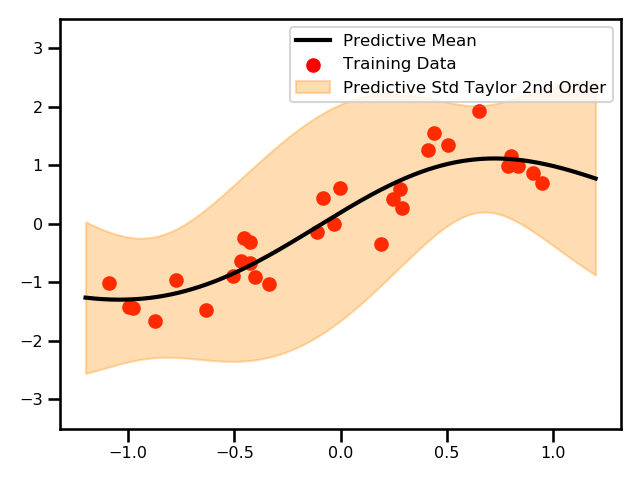

Here, we see a plot for the differences between the two GPs.

Satellite Data

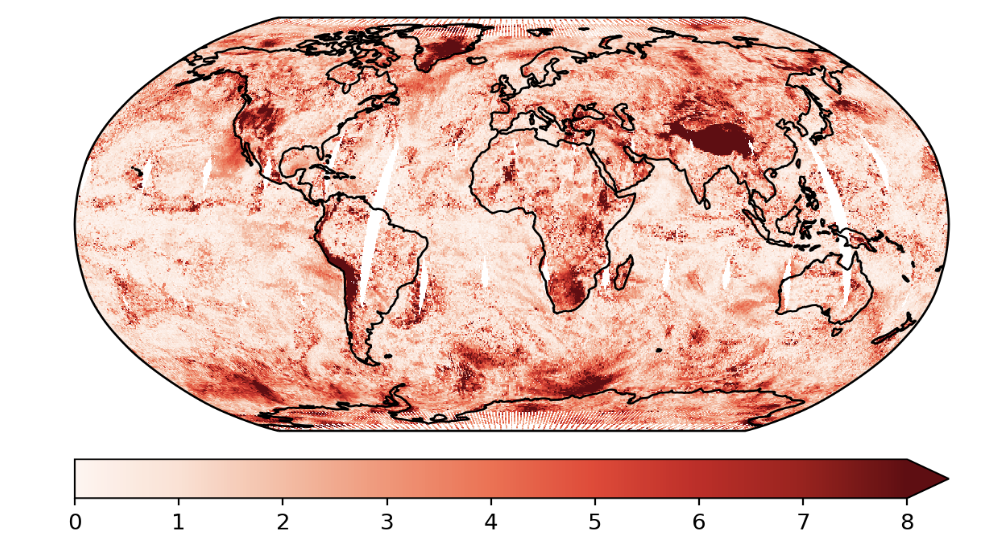

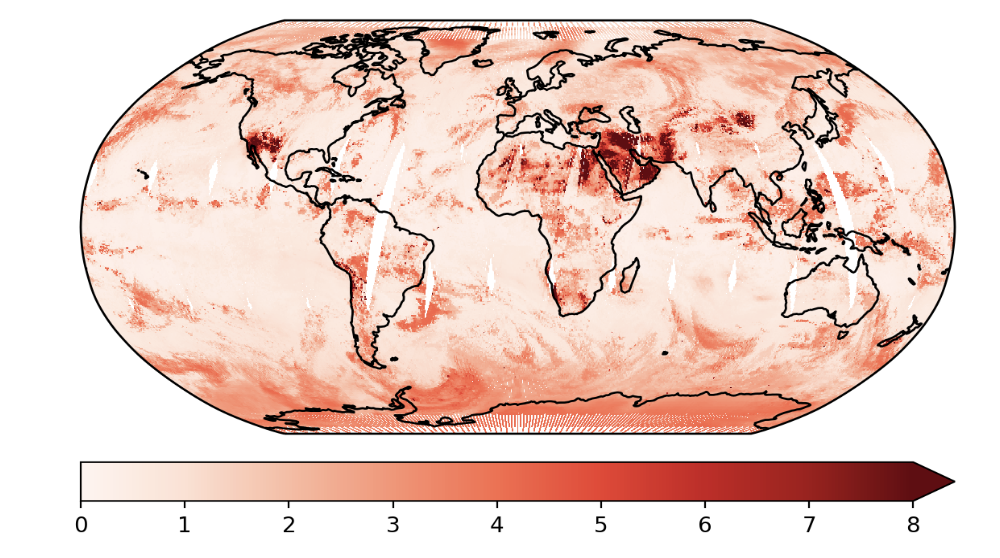

These are the predictions using the exact GP and the predictive variances.

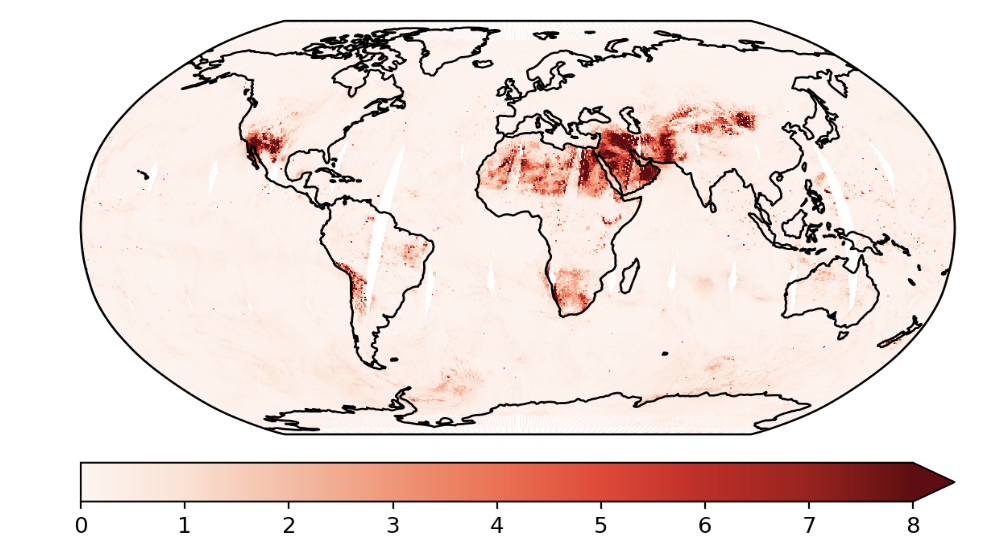

This is an example where we used the Taylor expanded GP. In this example, we only did the first order approximation.

Sparse GPs¶

We can extend this method to other GP algorithms including sparse GP models. The only thing that changes are the original \mu_{GP} and \nu^2_{GP} equations. In a sparse GP we have the following predictive functions

So the new predictive functions will be:

Practically speaking, this leaves us with the following predictive mean and variance functions:

As shown above, this is a fairly extensible method that offers a cheap improved predictive variance estimates on an already trained GP model. Some future work could be evaluating how other GP models, e.g. Sparse Spectrum GP, Multi-Output GPs, e.t.c.

Literature¶

Theory

- Bayesian Filtering and Smoothing - Smio Sarkka ()- Book

- Modelling and Control of Dynamic Systems Using GP Models - Jus Kocijan () - Book

Applied to Gaussian Processes

- Gaussian Process Priors with Uncertain Inputs: Multiple-Step-Ahead Prediction - Girard et. al. (2002) - Technical Report

Does the derivation for taking the expectation and variance for the Taylor series expansion of the predictive mean and variance.

- Expectation Propagation in Gaussian Process Dynamical Systems: Extended Version - Deisenroth & Mohamed (2012) - NeuRIPS

First time the moment matching and linearized version appears in the GP literature.

- Learning with Uncertainty-Gaussian Processes and Relevance Vector Machines - Candela (2004) - Thesis

Full law of iterated expectations and conditional variance.

- Gaussian Process Training with Input Noise - McHutchon & Rasmussen et. al. (2012) - NeuRIPS

Used the same logic but instead of just approximated the posterior, they also applied this to the model which resulted in an iterative procedure.

- Multi-class Gaussian Process Classification with Noisy Inputs - Villacampa-Calvo et. al. (2020) - axriv

Applied the first order approximation using the Taylor expansion for a classification problem. Compared this to the variational inference.